There’s been a huge amount of news lately about large language models, such as ChatGPT, and how they may impact medicine. From passing licensing exams to co-authoring papers, ChatGPT is rapidly being adopted in biomedical science applications, even as human-powered regulatory agencies and publishers scramble to understand the ethics and implications of this expansion. I am a medical student and researcher in the machine learning field, and I’ve been interested in one question in particular: As a future physician, how will artificial intelligence be used in my medical practice?

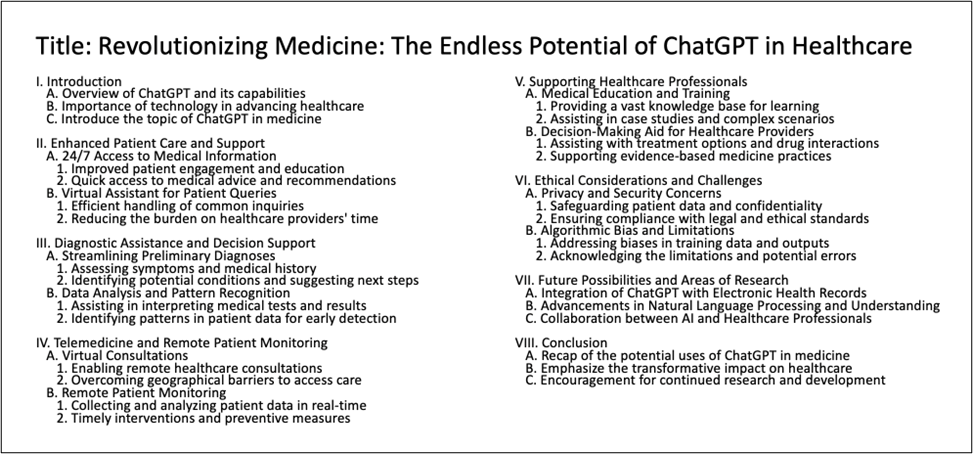

To get started, I decided to go straight to the source — I asked ChatGPT to “outline an article about the uses of ChatGPT in medicine.” It rose to the challenge quite admirably, offering a comprehensive list of possible applications and suggesting some potential ethical challenges, under the somewhat self-aggrandizing title, Revolutionizing Medicine: The Endless Potential of ChatGPT in Healthcare.

I agree completely that ChatGPT’s ability to summarize evidence and automate tasks may be a huge asset in the clinic, though the privacy and legal aspects will pose challenges. I’m slightly less optimistic, though, about a few of the categories: I am not sure that ChatGPT is quite ready to handle some of the more complex care coordination tasks required of health care workers, or that its natural language processing technology is fully ready to interact with patients. In my upcoming blog posts, I’ll discuss these opportunities and limitations.

Today, I will demonstrate an example of how ChatGPT might help a medical student learn about the potential causes of illness in a patient and generate a differential diagnosis — drawing on my experiences as a medical student and AI researcher.

In medical school, one of the first things I learned was the importance of generating a comprehensive differential, or a full list of as many causes of illness as possible for each clinical scenario. It can be easy to jump into treating the most likely option, but it’s important to systematically consider all options: the most common and obvious causes, but also the very rare but life-threatening diagnoses and the unusual presentations of disease. This allows the practitioner to then order the differential by priority and work down the list, systematically ruling out nonissues and narrowing in on a diagnosis that can be actively managed and treated.

As a medical student, one of the things I found challenging about this was casting a wide enough net. I could always think of one or two important potential diagnoses (often among those I’d just read about in class) and a few hot topics that you never want to miss and are always on the differential (e.g., cancer), but I sometimes struggled to come up with a comprehensive list, particularly when the patient’s symptoms were very broad and nonspecific. This is a scenario in which ChatGPT could be helpful. The scenario below is entirely fictitious and does not involve a real patient, though I have tried to realistically model the way I approach differential diagnoses as a first-year medical student.

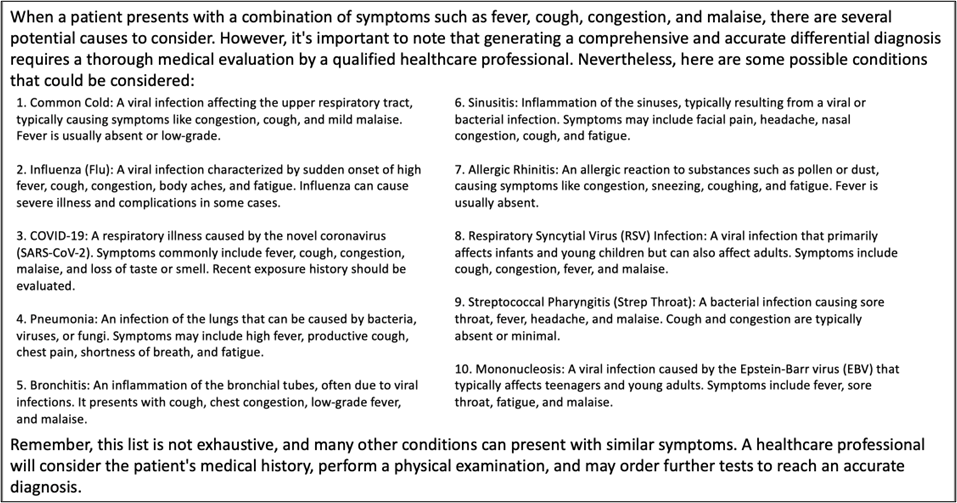

For example, I asked ChatGPT to “generate a differential diagnosis for a patient presenting with two days of fever, cough, congestion and malaise,” and I got back this fairly complete differential:

This differential has a wide range of potential infectious causes and mentions the possibility of allergies (rather than infection). When I asked ChatGPT a series of questions about this differential, it returned useful though very generalized information.

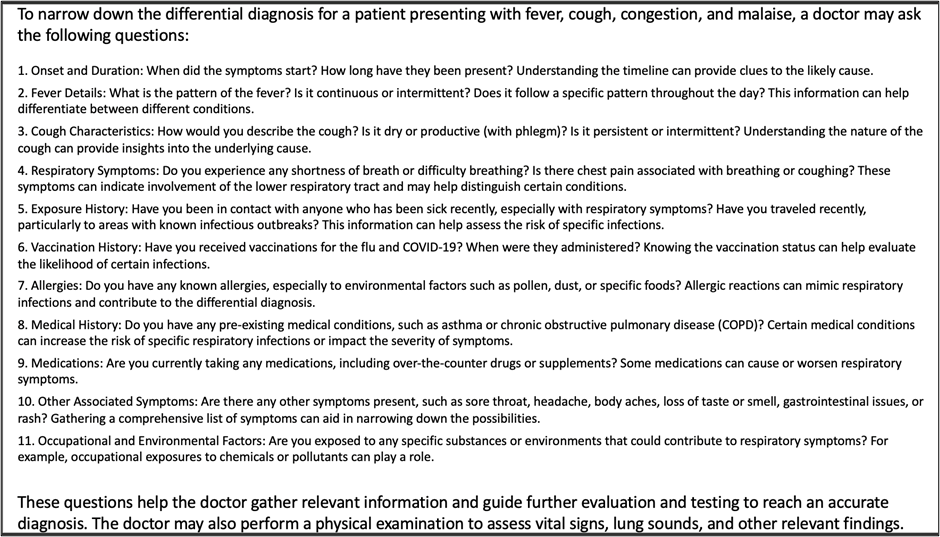

I was able to obtain information on how to proceed with this list by asking, “What questions should a doctor ask to narrow the differential?”

This answer suggested several questions about medical history and symptoms that would be beneficial to ask a patient. It also (correctly) suggested that a physical exam would be important in addition to asking questions.

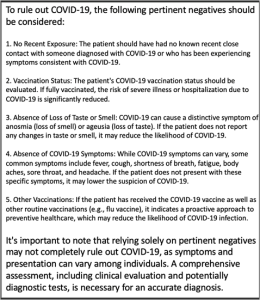

In this hypothetical scenario, I then imagined I had spoken with my patient and received valuable information. I was not very worried about a COVID-19 diagnosis, but I wanted to double-check my reasoning given that there had been a recent surge in cases. I asked ChatGPT, “What are the pertinent negatives to rule out COVID-19?” The reply was a list of symptoms that I could double-check in order to be more confident in keeping COVID-19 low on the differential.

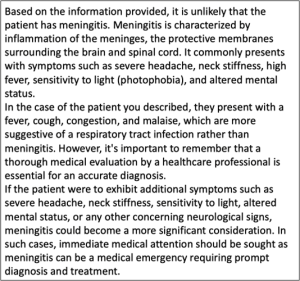

Finally, I asked ChatGPT, “Is it possible that this patient has meningitis?” This would be a medical emergency, but it was very unlikely: the only suggestive symptom the patient had was fever. ChatGPT responded appropriately, explaining that meningitis was unlikely because the patient lacked the other symptoms of the disease, but it also cautioned about the importance of a full medical evaluation.

This was all useful information, albeit not substantially more detailed than what I could have found by consulting more traditional resources such as UpToDate. What I thought really set ChatGPT apart here was that it helped me organize my thoughts by ordering the differential and brainstorming questions needed to rule out certain diagnoses in a rapid fashion.

I also think ChatGPT was right to exercise caution by explicitly stating that its use was not a substitute for a careful physical exam and diagnostic labs. This is a crucial point for patient safety, and I think safeguards for the use of ChatGPT in patient care decisions will be extremely important. This was a purely hypothetical scenario — in a true clinic environment, it would not be safe or ethical to use ChatGPT’s logic rather than standard clinical workflows to determine a final diagnosis, or even to rule out diagnoses solely based on ChatGPT’s answers. In my opinion, though, ChatGPT could be useful as a tool to help brainstorm potential differential diagnoses and ensure that important possibilities are not left out, and to help students learn how to organize their differentials.
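For readers who want to repeat this exercise, the sequence of prompts quoted above can be written down as an ordered conversation. The following is only a minimal sketch: the `role`/`content` message layout is an assumed, commonly used chat convention rather than something described in this post, `build_conversation` is a hypothetical helper name, and the code does not call ChatGPT or any other service. It contains only the fictitious scenario's prompts and no patient information.

```python
# The four prompts quoted in the walkthrough, in the order they were asked.
PROMPTS = [
    "generate a differential diagnosis for a patient presenting with "
    "two days of fever, cough, congestion and malaise",
    "What questions should a doctor ask to narrow the differential?",
    "What are the pertinent negatives to rule out COVID-19?",
    "Is it possible that this patient has meningitis?",
]


def build_conversation(prompts, replies=()):
    """Interleave user prompts with any replies already received.

    Hypothetical helper: the role/content dictionaries are an assumed
    message format, not an API documented in the article.
    """
    messages = []
    for i, prompt in enumerate(prompts):
        messages.append({"role": "user", "content": prompt})
        # Attach the reply to this prompt only if one has been recorded.
        if i < len(replies):
            messages.append({"role": "assistant", "content": replies[i]})
    return messages


conversation = build_conversation(PROMPTS)
for message in conversation:
    print(f"{message['role']}: {message['content']}")
```

Keeping the prompts in one ordered list mirrors the workflow described here: start broad with the differential, then ask narrowing questions, then check specific diagnoses one at a time.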

As informative as the above scenario was, I think it also raises important questions about privacy: how much information about a patient is OK to share with ChatGPT? In the hypothetical scenario above, what would have happened if I knew about a specific location the patient had traveled to that made a particular infectious agent more likely? Or what if the patient shared with me the location at which their child attended day care and that there was a certain viral outbreak there that may have exposed the family? Would these be considered identifiable details? And would it be appropriate to share them with ChatGPT even if it would help narrow the differential diagnosis?

In the future, will patients need to consent to having a large language model read their medical record? In the absence of this consent, I argue that the responsible thing to do would be to integrate knowledge about a specific patient with more general knowledge summarized by ChatGPT — a useful, efficient tool but still far from a totally automated diagnosis!

Want to read more from the Johns Hopkins School of Medicine? Subscribe to the Biomedical Odyssey blog and receive new posts directly in your inbox.